- Boris Vassilev

What Makes a Good Editor? Some Insights From Our Editor Test

The written word remains one of the most important means of communicating and connecting, even in an age of rapidly advancing technology.

At Scripted.com, our goal is to improve the quality of written content on the Internet. However, there's more than just a compelling story that goes into creating an effective piece of writing: a good editing process can be just as influential as quality composition. For this reason, we've recently created an editor layer for all of our written work by vetting editors through an automated editing test.

The beta test, available only to Scripted.com's network of freelance writers, algorithmically measures the basic qualities we associate with a good editor. Sarah Hadley, a Scripted.com engineer, and I developed the test's methodology based on the insights we gained from our writer English proficiency test and our own previous editing experiences.

We divided the test into two sections that we consider to be the most important aspects of editing: English semantic recognition (copyediting) and a deeper understanding of the writing assignment. The essay copyediting section measures an editor's ability to detect errors in the semantic elements of writing: grammar, spelling, punctuation, word choice and idiomatic errors. The multiple-choice section tests understanding of theme, text organization and prompt adherence.

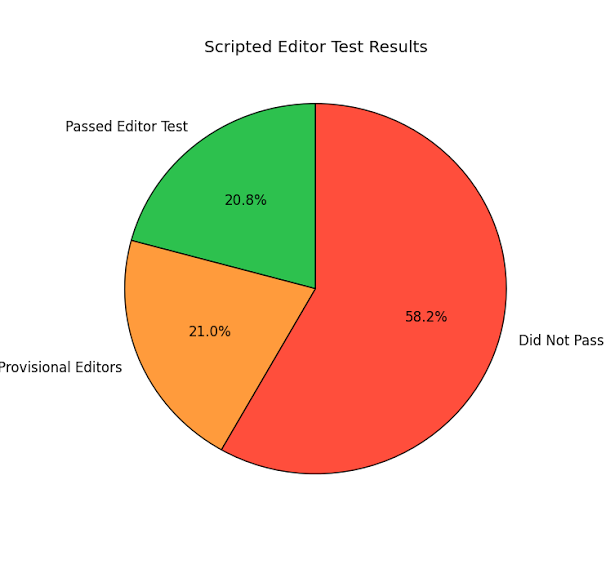

The insights we have so far imply that good writers aren't always great editors. As of today, of the 500 writers who have taken the test, 209 passed and 291 did not. We set the passing score based on the performance of in-house copy editors.
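To put those counts in proportion, here is a minimal sketch of the pass-rate arithmetic. The function name `pass_rate` is ours for illustration and is not part of the editor test itself.

```python
def pass_rate(passed: int, failed: int) -> float:
    """Return the share of test takers who passed, as a fraction of all takers."""
    total = passed + failed
    if total == 0:
        raise ValueError("pass rate is undefined with no test takers")
    return passed / total


# Counts reported above: 209 passed, 291 did not (500 test takers in total).
rate = pass_rate(209, 291)
print(f"Pass rate: {rate:.1%}")  # Pass rate: 41.8%
```

In other words, roughly two in five writers who took the test met the bar set by the in-house copy editors.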

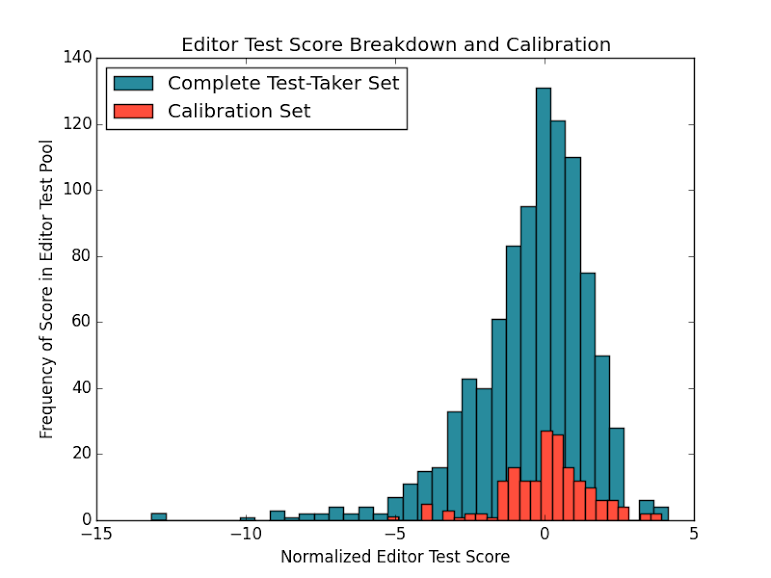

As you can see in the graph above, the test performance distribution in the prospective editor pool closely mirrors the shape of the calibration pool scores. This will help us accurately gauge how test takers will perform compared with editors we've worked with in the past.

Beyond being just a filter for editors in our system, we designed the editor test to suss out more subtle aspects of the editing process -- for example, editor fatigue. We predicted that a test taker's performance would suffer later in the test as they grew tired. To test this, we randomized the order in which each test taker was presented with the test elements. We were surprised to find that copyediting performance actually increased with time for 75 percent of our test takers. This suggests that editors need a warm-up period -- time to get into their work groove -- and can effectively edit far longer pieces than we had originally predicted.
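The fatigue check described above can be sketched roughly as follows. This is an illustration under our own assumptions, not the test's actual code: the function names, the half-versus-half comparison, and the toy results are all hypothetical. The idea is to shuffle the test elements per test taker, then compare accuracy on the first half of what they saw with accuracy on the second half.

```python
import random
from statistics import mean


def presentation_order(elements, seed):
    """Return a shuffled copy of the test elements for one test taker.

    Assumption: a per-taker seed keeps each order reproducible.
    """
    rng = random.Random(seed)
    order = list(elements)
    rng.shuffle(order)
    return order


def improved_over_time(results):
    """True if accuracy on the later half beats accuracy on the earlier half.

    `results` holds 1 (error caught) or 0 (error missed), in the order
    the elements were presented to the test taker.
    """
    half = len(results) // 2
    if half == 0:
        raise ValueError("need at least two results to compare halves")
    return mean(results[half:]) > mean(results[:half])


def share_improved(all_results):
    """Fraction of test takers whose performance increased with time."""
    flags = [improved_over_time(r) for r in all_results]
    return sum(flags) / len(flags)


# Toy, made-up results for four hypothetical test takers.
toy_results = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [1, 0, 1, 1],
    [1, 1, 0, 1],
]
print(presentation_order(["grammar", "spelling", "punctuation"], seed=7))
print(share_improved(toy_results))  # 0.75
```

Because the order is randomized, a later-half improvement cannot be explained by easier elements sitting at the end of the test, which is what makes the comparison meaningful.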

[Figure: Average Scripted Editor Test Scores by State (map shaded by normalized average editor test score)]

In the graph above we can see the geographic distribution of average editor test scores (the darker the blue, the higher the average score). This is one of several metrics being collected through the editor test that might be helpful down the road as we accrue more test results and continue to push the envelope in understanding what makes a good editor.

The editor test is a step in the direction of constantly improving the writing quality here at Scripted.com. Even in this early stage, it has already given us valuable insight into how editors perform. As more editors take the test, we will gain more perspective on our editor ecosystem.

Questions or comments? Say hello in the comments section below.
